Table of Contents

- What is ML testing?

- Types of ML Testing

- Evaluation Metrics for ML Models

- How to Test Machine Learning Models?

- Ethical Considerations in ML Testing

- Tools and Frameworks for ML Testing

- Conclusion

- Why Choose TestingXperts for ML Testing?

From smart assistants making our lives easier to sophisticated algorithms detecting medical conditions, applications of machine learning are now part of everyday life. Yet, as we increasingly rely on these algorithms, a question arises: how can we trust them?

Unlike traditional software, which follows explicit instructions, ML algorithms learn from data, drawing patterns and making decisions. This learning paradigm, while highly intelligent, introduces complexities. If traditional software fails, it’s often due to a coding error – a logical misstep. But when an ML model fails, it could be due to various reasons:

• A bias in the training data

• Overfitting to the training data

• An unforeseen interaction between variables

Because ML models are involved in critical decisions such as approving loans, steering autonomous vehicles, or diagnosing patients, their errors can have serious consequences. This is why ML testing is a crucial process that every business needs to implement. It helps ensure that ML models operate responsibly, accurately, and ethically.

What is ML testing?

Machine learning testing is the process of evaluating and validating the performance of machine learning models to ensure their correctness, accuracy, and robustness. Unlike traditional software testing, which mainly focuses on code functionality, ML testing includes additional layers due to the inherent complexity of ML models. It ensures that ML models perform as intended, providing reliable results and adhering to industry standards.

Importance of ML Testing

Maintaining Model Accuracy

ML models are trained on historical data, and their accuracy largely depends on the quality and relevance of this data. ML model testing helps identify discrepancies between predicted and actual outcomes, allowing developers to fine-tune the model and enhance its accuracy.

Protection Against Bias

Bias in ML models can lead to unfair or discriminatory outcomes. Thorough testing can reveal biases in data and algorithms, enabling developers to address them and create more equitable models.

Adapting to Changing Data

Real-world data is constantly evolving. ML testing ensures that models remain effective as new data is introduced, maintaining their predictive power over time.

Enhancing Reliability

Robust testing procedures strengthen the reliability of ML systems, instilling confidence in their performance and reducing the risk of unexpected failures.

Types of ML Testing

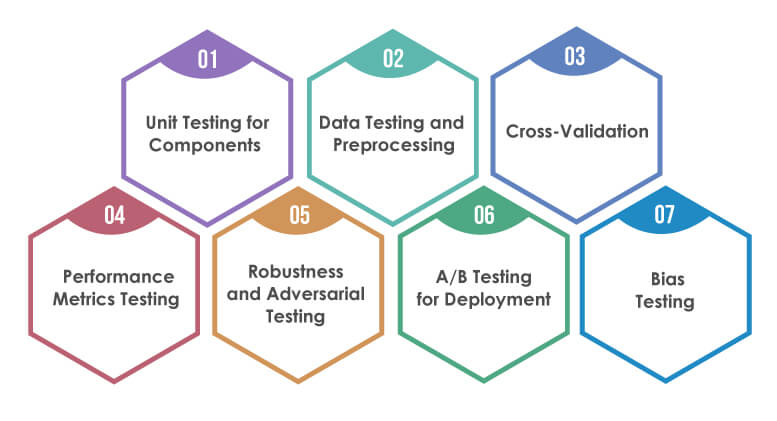

Let us look at the main types of ML testing, each of which addresses a specific aspect of model performance.

Unit Testing for Components

Like traditional software testing, unit testing in ML focuses on testing individual components of the ML pipeline. It involves assessing the correctness of each step, from data preprocessing to feature extraction, model architecture, and hyperparameters. Ensuring that each building block functions as expected contributes to the overall reliability of the model.

Data Testing and Preprocessing

The quality of input data impacts the performance of an ML model. Data testing involves verifying the data’s integrity, accuracy, and consistency. This step also includes preprocessing testing to ensure that data transformation, normalisation, and cleaning processes are executed correctly. Clean and reliable data leads to accurate predictions.
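
As a rough illustration of the data checks described above, the sketch below flags missing values and out-of-range values in a small set of records. The `validate_rows` helper, the field names, and the age limits are all assumptions made up for this example, not part of any particular library.

```python
def validate_rows(rows, required, ranges):
    """Return a list of (row_index, problem) tuples for rows that fail basic checks."""
    problems = []
    for i, row in enumerate(rows):
        # Integrity check: every required field must be present and not None.
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        # Accuracy check: numeric fields must fall inside a plausible range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{field} out of range"))
    return problems


# Hypothetical loan-application records, invented for illustration.
rows = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": 41000.0},   # missing value
    {"age": 210, "income": 38000.0},    # implausible age
]
problems = validate_rows(rows, required=["age", "income"], ranges={"age": (18, 120)})
print(problems)
```

Checks like these are usually run before training and again whenever new data arrives, so that bad records are caught before they reach the model.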

Cross-Validation

Cross-validation is a powerful technique for assessing how well an ML model generalises to new, unseen data. It involves partitioning the dataset into multiple subsets, training the model on different subsets, and testing its performance on the remaining data. Cross-validation provides insights into a model’s potential performance on diverse inputs by repeating this process and averaging the results.

Performance Metrics Testing

Choosing appropriate performance metrics is crucial for evaluating model performance. Metrics like accuracy, precision, recall, and F1-score provide quantitative measures of how well the model is doing. Testing against these performance metrics helps ensure that the model delivers results in line with the intended objectives.

Robustness and Adversarial Testing

Robustness testing involves assessing how well the model handles unexpected inputs or adversarial attacks. Adversarial testing explicitly evaluates the model’s behaviour when exposed to deliberately modified inputs designed to confuse it. Robust models are less likely to make erroneous predictions under challenging conditions.
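
One simple way to probe robustness is to add small random noise to inputs and count how often the prediction changes. The sketch below does this for a toy classifier. Note that it uses random perturbations rather than a true adversarial attack, which would search for the worst-case modification. The `predict` function stands in for a trained model, and `flip_rate` is a hypothetical helper.

```python
import random


def predict(x):
    """Toy stand-in for a trained classifier: class 1 when the feature sum is positive."""
    return 1 if sum(x) > 0 else 0


def flip_rate(inputs, noise_scale, trials=100, seed=0):
    """Fraction of noisy predictions that differ from the clean prediction."""
    rng = random.Random(seed)
    flips = 0
    for x in inputs:
        clean = predict(x)
        for _ in range(trials):
            # Perturb every feature with Gaussian noise of the given scale.
            noisy = [v + rng.gauss(0.0, noise_scale) for v in x]
            if predict(noisy) != clean:
                flips += 1
    return flips / (len(inputs) * trials)


inputs = [[2.0, 1.5], [-3.0, -1.0], [0.05, 0.02]]  # last point sits near the boundary
small = flip_rate(inputs, noise_scale=0.01)
large = flip_rate(inputs, noise_scale=1.0)
print(f"flip rate with small noise: {small:.3f}, with large noise: {large:.3f}")
```

A flip rate that rises sharply under small perturbations suggests the model's decisions are fragile around those inputs.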

A/B Testing for Deployment

Once a model is ready for deployment, A/B testing can be employed. It involves deploying the new ML model alongside an existing one and comparing their performance in a real-world setting. A/B testing helps ensure that the new model doesn’t introduce unexpected issues and performs at least as well as the current solution.
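
To judge whether a difference between the two models is more than chance, one common option is a two-proportion z-test on their success rates. The sketch below is a minimal version under the assumption that each request is independent and that "success" means a correct prediction. The counts are invented for illustration.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two_sided_p) for the difference between two success rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both models perform equally.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Invented counts: correct predictions out of requests served by each model.
z, p = two_proportion_z_test(successes_a=900, total_a=1000, successes_b=930, total_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the new model's rate genuinely differs from the existing one. The significance threshold to act on is a business decision rather than something the test provides.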

Bias Testing

Bias in ML models can lead to unfair or discriminatory outcomes. To tackle this, bias and fairness testing aims to identify and mitigate biases in the data and the ML model’s predictions. It ensures that the model treats all individuals and groups fairly.
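
One widely used fairness check compares how often each group receives a positive prediction. The sketch below computes per-group selection rates and the gap between them, often called the demographic parity difference. The helper names, group labels, and predictions are assumptions for illustration, and this is only one of several fairness definitions.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Invented predictions (1 = approved) and group labels.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

Here group "a" is approved three times as often as group "b", which would be a signal to investigate the data and the model further.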

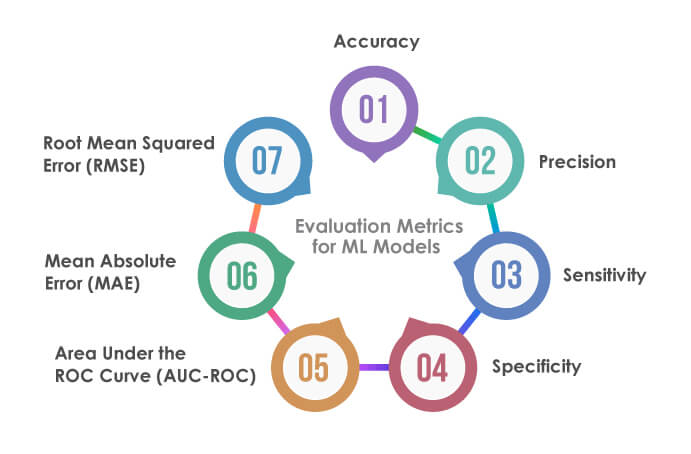

Evaluation Metrics for ML Models

Evaluation metrics are needed to measure the performance and effectiveness of ML models. These metrics provide valuable insights into how well ML models perform, helping fine-tune and optimise them for better results. Let us look at some of the most common metrics.

Accuracy

Accuracy is the most straightforward metric, measuring the ratio of correctly predicted instances to the total instances in the dataset. It provides an overall view of a model’s correctness. However, it might not be the best choice when dealing with imbalanced datasets, where one class dominates the other.

Precision

Precision focuses on the accuracy of positive predictions made by the model. It is the ratio of true positives to the sum of true positives and false positives. Precision is valuable when false positives are costly or undesirable.

Sensitivity

Sensitivity, also known as recall or the true positive rate, assesses the model’s ability to capture all positive instances. It is the ratio of true positives to the sum of true positives and false negatives. Sensitivity is crucial when the consequences of false negatives are significant.

Specificity

Specificity, also known as the true negative rate, evaluates a model’s ability to identify negative instances correctly. It’s the ratio of true negatives to the sum of true negatives and false positives. Specificity is valuable when focusing on the performance of negative predictions.
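
The four classification metrics above all derive from the same confusion-matrix counts. The sketch below computes them for a small invented set of labels. It assumes binary labels encoded as 0 and 1 and that every denominator is non-zero, and the function names are made up for this example.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, sensitivity and specificity from the confusion counts."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Invented labels: 4 positives and 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example the model misses one positive and raises one false alarm, so precision and sensitivity are both 0.75 while accuracy is 0.8.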

Area Under the ROC Curve (AUC-ROC)

The AUC-ROC metric is helpful for binary classification problems. The ROC curve plots the true positive rate against the false positive rate across classification thresholds, visually representing a model’s ability to distinguish between classes, and the AUC is the area under that curve. An AUC of 0.5 corresponds to random guessing, and values closer to 1 indicate better model performance.
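
AUC-ROC can also be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition, counting ties as half. It is a simple quadratic-time illustration with invented scores, not an optimised implementation.

```python
def roc_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Invented labels and model scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(y_true, scores)
print(auc)
```

Three of the four positive-negative pairs are ranked correctly here, giving an AUC of 0.75.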

Mean Absolute Error (MAE)

Moving beyond classification, MAE is a metric used in regression tasks. It measures the average absolute difference between predicted and actual values. It gives us an idea of how far our predictions are from reality.

Root Mean Squared Error (RMSE)

Like MAE, RMSE is a regression metric. It is the square root of the average squared difference between predicted and actual values, so it penalises large errors more heavily than small ones.
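
The difference between the two regression metrics is easiest to see side by side. In the sketch below, two invented sets of predictions have the same MAE, but the set containing one large error has a higher RMSE.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


y_true = [10.0, 20.0, 30.0, 40.0]
even_errors = [12.0, 22.0, 32.0, 42.0]  # every prediction is off by 2
one_outlier = [10.0, 20.0, 30.0, 48.0]  # a single prediction is off by 8

print(mae(y_true, even_errors), rmse(y_true, even_errors))
print(mae(y_true, one_outlier), rmse(y_true, one_outlier))
```

Both prediction sets have an MAE of 2, but the RMSE doubles from 2 to 4 when the same total error is concentrated in one prediction.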

How to Test Machine Learning Models?

Testing ML models involves specific strategies tailored to their unique complexities. Let’s look at how to test machine learning models effectively, providing actionable steps to enhance their performance:

Understand Your Data

Before starting with testing, it’s essential to have a deep understanding of your dataset. Explore its characteristics, distribution, and potential challenges. This knowledge will help you design effective testing scenarios and identify potential pitfalls.

Split Your Data

Divide your dataset into training, validation, and testing sets. The training set is used to train the model, the validation set helps fine-tune hyperparameters, and the testing set assesses the model’s final performance.
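
A three-way split can be sketched in a few lines. The example below shuffles the records with a fixed seed and carves off validation and test portions. The 70/15/15 proportions and the function name are assumptions for illustration, and libraries such as scikit-learn offer their own splitting utilities.

```python
import random


def train_val_test_split(items, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle items reproducibly and split them into train, validation and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


records = list(range(100))  # stand-in for 100 labelled examples
train, val, test = train_val_test_split(records)
print(len(train), len(val), len(test))
```

Fixing the seed keeps the split reproducible, which matters when comparing models across runs. The test set should be set aside and used only for the final assessment.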

Unit Testing for Components

Start by testing individual components of your ML pipeline. This includes checking data preprocessing steps, feature extraction methods, and model architecture. Verify that each component functions as expected before integrating them into the entire pipeline.
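
As an illustration of component-level testing, the sketch below unit-tests a small preprocessing step using Python's built-in `unittest` module. The `min_max_scale` function is a hypothetical component written for this example. A real pipeline would test its own transformations in the same way.

```python
import unittest


def min_max_scale(values):
    """Scale values linearly into [0, 1]; constant input maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


class MinMaxScaleTest(unittest.TestCase):
    def test_known_values(self):
        self.assertEqual(min_max_scale([3.0, 7.0, 11.0]), [0.0, 0.5, 1.0])

    def test_constant_input_does_not_divide_by_zero(self):
        self.assertEqual(min_max_scale([5.0, 5.0]), [0.0, 0.0])

    def test_order_is_preserved(self):
        scaled = min_max_scale([2.0, 9.0, 4.0])
        self.assertTrue(scaled[0] < scaled[2] < scaled[1])


# Run the tests programmatically and keep the result object for inspection.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(MinMaxScaleTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The edge-case test for constant input is the kind of check that tends to catch preprocessing bugs before they silently distort model inputs.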

Cross-Validation

Utilise cross-validation to assess your model’s generalisation capabilities. Apply techniques like K-fold cross-validation, where the dataset is divided into K subsets, and the model is trained and evaluated K times, each time using a different subset for validation.
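
The K-fold procedure described above can be sketched as follows. To keep the example self-contained, the "model" is a baseline that always predicts the mean of its training targets, and the data is invented. The folds are taken in order without shuffling, which assumes the records are not sorted in a meaningful way.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


def mean_predictor_mae(targets, k):
    """Cross-validated MAE of a baseline that always predicts the training mean."""
    fold_errors = []
    for train, validation in k_fold_indices(len(targets), k):
        prediction = sum(targets[i] for i in train) / len(train)
        errors = [abs(targets[i] - prediction) for i in validation]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k


targets = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0, 4.0, 6.0, 5.0, 5.0]
folds = list(k_fold_indices(len(targets), k=5))
score = mean_predictor_mae(targets, k=5)
print(f"{len(folds)} folds, cross-validated MAE = {score:.3f}")
```

Each example is used for validation exactly once, so the averaged score reflects performance across the whole dataset rather than a single lucky or unlucky split.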

Choose Evaluation Metrics

Select appropriate evaluation metrics based on the nature of your problem. For classification tasks, precision, accuracy, recall, and F1-score are standard. Regression tasks often use metrics like MAE or RMSE.

Regular Model Monitoring

Machine learning models can degrade over time due to changes in data distribution or other factors. Regularly monitor your deployed models and retest them periodically to ensure they maintain their accuracy and reliability.
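
A very simple monitoring check compares a feature's live values with the reference values seen at training time. The sketch below measures how far the live mean has moved, in units of the reference standard deviation. The threshold of 2.0 and the sample values are arbitrary assumptions, and production systems typically use richer drift statistics.

```python
import statistics


def mean_shift_score(reference, live):
    """Shift of the live mean from the reference mean, in reference standard deviations."""
    ref_mean = statistics.mean(reference)
    ref_std = statistics.stdev(reference)
    return abs(statistics.mean(live) - ref_mean) / ref_std


def has_drifted(reference, live, threshold=2.0):
    """Flag drift when the mean shift exceeds the (assumed) threshold."""
    return mean_shift_score(reference, live) > threshold


# Invented feature values: training-time reference and two live samples.
reference = [50.0, 52.0, 48.0, 51.0, 49.0, 50.0]
stable = [49.0, 51.0, 50.0, 52.0, 48.0, 50.0]
shifted = [60.0, 62.0, 59.0, 61.0, 58.0, 60.0]

print(has_drifted(reference, stable), has_drifted(reference, shifted))
```

A drift flag does not prove the model has degraded, but it is a reasonable trigger for retesting on recent data.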

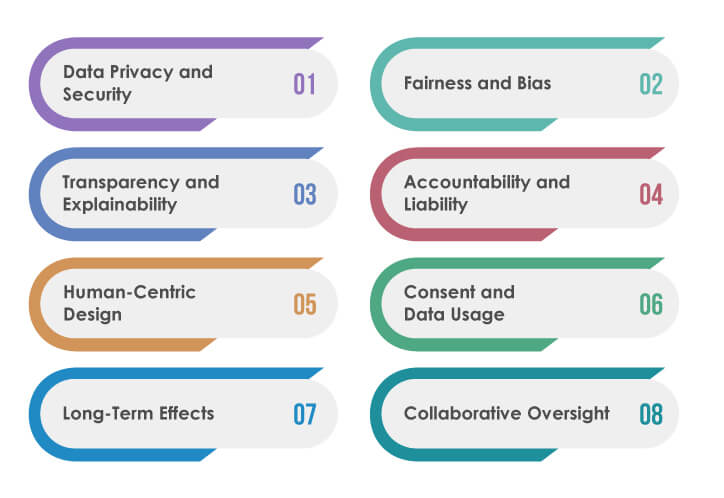

Ethical Considerations in ML Testing

Alongside rigorous testing and refinement of ML models, it’s vital to consider the ethical implications that may arise. Let us explore the ethical considerations in ML testing, the potential pitfalls, and how to ensure testing practices align with ethical principles.

Data Privacy and Security

The data must be treated with the utmost care when testing ML models. Ensure that sensitive and personally identifiable information is appropriately encrypted to protect individuals’ privacy. Ethical testing respects the rights of data subjects and safeguards against potential data breaches.

Fairness and Bias

When testing ML models, it is essential to examine whether they exhibit bias against certain groups. Tools and techniques are available to measure and mitigate bias, helping ensure that models treat all individuals fairly and equitably.

Transparency and Explainability

ML models can be complex, making their decisions challenging to understand. Ethical testing includes evaluating the transparency and explainability of models. Users and stakeholders should understand how the model arrives at its predictions, fostering trust and accountability.

Accountability and Liability

Who is accountable if an ML model makes a harmful or incorrect prediction? Ethical ML testing should address questions of responsibility and liability. Establish clear guidelines for identifying parties responsible for model outcomes and implement mechanisms to rectify any negative impacts.

Human-Centric Design

ML models interact with humans, so their testing should reflect human-centred design principles. Consider the end users’ needs, expectations, and potential impacts when assessing model performance. This approach ensures that models enhance human experiences rather than undermine them.

Consent and Data Usage

Testing often involves using real-world data, which may include personal information. Obtain appropriate consent from individuals whose data is used for testing purposes. Be transparent about data use and ensure compliance with data protection regulations.

Long-Term Effects

ML models are designed to evolve. Ethical testing should consider the long-term effects of model deployment, including how the model might perform as data distributions change. Regular testing and monitoring ensure that models remain accurate and ethical throughout their lifecycle.

Collaborative Oversight

Ethical considerations in ML testing should not be limited to developers alone. Involve diverse stakeholders, including ethicists, legal experts, and representatives from the affected communities, to provide a holistic perspective on potential ethical challenges.

Tools and Frameworks for ML Testing

Various ML testing tools and frameworks are available to streamline and enhance the testing process. Let’s look at some tools and frameworks that can help you navigate the complexities of ML testing effectively.

TensorFlow

TensorFlow, developed by Google, is one of the most popular open-source ML frameworks. It offers a wide range of tools for building and testing ML models. TensorFlow’s ecosystem includes TensorFlow Extended (TFX) for production pipeline testing, TensorFlow Data Validation for checking the data used in machine learning, and TensorFlow Model Analysis for in-depth model evaluation.

PyTorch

PyTorch is another widely used open-source ML framework known for its dynamic computation graph and ease of use. PyTorch provides tools for model evaluation, debugging, and visualisation. For example, the “torchvision” package offers various datasets and transformations for testing and validating computer vision models.

Scikit-learn

Scikit-learn is a versatile Python library that provides data mining, analysis, and machine learning tools. It includes a variety of algorithms and metrics for model evaluation, such as cross-validation and grid search for hyperparameter tuning.

Fairlearn

Fairlearn is a toolkit designed to assess and mitigate fairness and bias issues in ML models. It includes algorithms to reweight data and adjust predictions to achieve fairness. Fairlearn helps you test and address ethical considerations in your ML models.

Conclusion

Testing machine learning models is a systematic and iterative process that ensures your models perform accurately and reliably. By following this guide, you can identify and address potential issues, optimise performance, and deliver AI solutions that meet the highest standards. Remember that testing is not a one-time event. It’s an ongoing process that protects the effectiveness of machine learning models throughout their lifecycle.

Why Choose TestingXperts for ML Testing?

Ensuring the reliability, accuracy, and performance of ML models is crucial in the rapidly evolving landscape of machine learning applications. At TestingXperts, we offer unparalleled ML Testing Services designed to empower businesses with robust and dependable AI-driven solutions. Partnering with TestingXperts means utilising our deep expertise, cutting-edge tools, and proven methodologies to validate and optimise ML models for success.

Industry-Leading Expertise

Our skilled professionals have a deep understanding of diverse ML algorithms, data structures, and frameworks, enabling them to devise comprehensive testing strategies tailored to your unique project requirements.

Comprehensive Testing Solutions

We offer end-to-end ML testing solutions encompassing every aspect of the model development cycle. From data preprocessing and feature engineering to model training and deployment, our services ensure a thorough examination of your ML system at every stage, enhancing accuracy and robustness.

Algorithmic Depth

Our ML testing experts possess an in-depth understanding of a wide array of ML algorithms and techniques. This knowledge enables us to uncover the complexities of your models and pinpoint potential vulnerabilities or inefficiencies, resulting in AI systems that excel in real-world scenarios.

Performance under Edge Cases

We rigorously evaluate how your ML models perform in edge cases, pushing the limits of their capabilities and uncovering potential weaknesses that could arise in unconventional situations.

Continuous Monitoring Solutions

TestingXperts offers continuous monitoring services, enabling you to keep a vigilant eye on your ML models even after deployment. This proactive approach ensures that your AI solutions adapt and remain performant as they encounter new data and challenges.

To know more about our ML testing services, contact our experts now.
