- Role of Beta Testing in AI Development

- Key Reasons for Beta Testing AI Tools

- The Impact of Beta Testing on AI Reliability

- Challenges and Considerations in AI Beta Testing

- Effective Beta Testing Strategies for AI Tools

- Conclusion

- Why Choose TestingXperts for Beta Testing Your AI Tools?

Artificial Intelligence (AI) stands out as one of the most transformative advancements of our era. As businesses and developers eagerly incorporate AI into their products and services, delivering high-quality, reliable AI tools has become more critical than ever. According to Forrester’s predictions for 2024, 60% of companies will start using generative AI technologies. However, like all software, AI isn’t immune to glitches, inaccuracies, or other unforeseen challenges.

Role of Beta Testing in AI Development

A report by Gartner predicts that by 2024, 60% of data for AI will be synthetic, used to simulate reality and future scenarios and to de-risk AI, up from 1% in 2021. Beta testing serves as a reality check, ensuring that AI tools are not only technically sound but also user-friendly, adaptable, and effective in real-world conditions. This phase allows developers and testers to collect critical feedback, identify unforeseen issues, and understand user interactions with the AI system. It’s about making AI tools not just smart but also practical, intuitive, and reliable.

By involving a diverse group of beta testers, developers can ensure their AI tools are robust, unbiased, and inclusive, catering to a wide range of user needs and environments. This practice not only enhances the quality of AI tools but also builds trust among users and stakeholders, paving the way for wider acceptance and adoption of AI technologies.

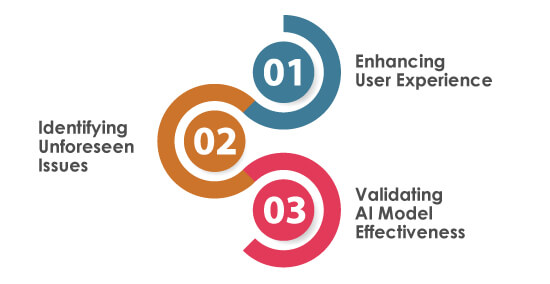

Key Reasons for Beta Testing AI Tools

Beta testing is not just about finding technical difficulties. It’s about refining the AI to align with its intended purpose and user needs. Let’s delve into why beta testing is indispensable for AI tools.

Enhancing User Experience

The primary objective of any AI solution is to meet user expectations. Beta testing plays a pivotal role in this. By exposing the AI system to real users in real scenarios, developers gain invaluable insights into how users interact with the system. This process reveals if the AI is intuitive, user-friendly, and capable of delivering the desired outcomes. Feedback gathered during beta testing enables developers to make necessary adjustments, enhancing the overall user experience. Such enhancements are crucial in fostering user satisfaction and loyalty.

Identifying Unforeseen Issues

Despite rigorous in-house testing, AI systems often encounter unforeseen issues when deployed in diverse real-world environments. These issues might range from minor glitches to significant operational challenges. Beta testing helps identify these hidden problems that might not have been apparent during earlier testing stages. It’s about detecting and resolving flaws impacting functionality, performance, or user satisfaction. Addressing these issues beforehand ensures a smoother launch and a more stable AI product.

Validating AI Model Effectiveness

Finally, beta testing is vital for validating the effectiveness of the AI model. AI tools are built on complex algorithms and datasets, and their effectiveness can vary significantly based on different external conditions and user interactions. Beta testing allows developers to verify if the AI model performs as intended in various real-world scenarios. It is an opportunity to assess the AI’s decision-making, adaptability, and learning capabilities. This validation is essential not only for immediate performance but also for the AI’s long-term evolution and improvement.

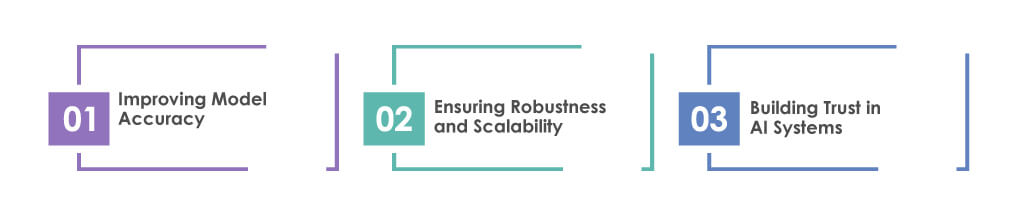

The Impact of Beta Testing on AI Reliability

Beta testing helps improve model accuracy, system robustness, scalability, and user trust in AI applications. Let’s examine its specific impacts on the reliability of AI systems:

Improving Model Accuracy

Real-world data and scenarios often differ significantly from controlled test environments. Beta testing exposes AI systems to a broad spectrum of real-world inputs and interactions, highlighting discrepancies and areas for improvement. Doing so enables developers to fine-tune their models, ensuring the AI’s predictions and responses are as accurate and reliable as possible in diverse situations.

Ensuring Robustness and Scalability

Robustness and scalability are critical for AI systems, particularly when scaled for wider deployment. Beta testing helps validate whether these systems can handle increased loads and diverse operational conditions without compromising performance. This phase tests an AI system’s resilience against potential faults and its ability to adapt to changing environments and user demands. Ensuring robustness and scalability during beta testing means building AI tools that are not only effective in a limited scope but also reliable and versatile in varied, real-world contexts.

Building Trust in AI Systems

Lastly, beta testing is crucial for building trust among users and stakeholders in AI systems. When users experience a well-functioning AI system that responds accurately and consistently during the beta phase, it builds confidence in the technology. Addressing issues and incorporating feedback during beta testing demonstrates a commitment to excellence and user satisfaction.

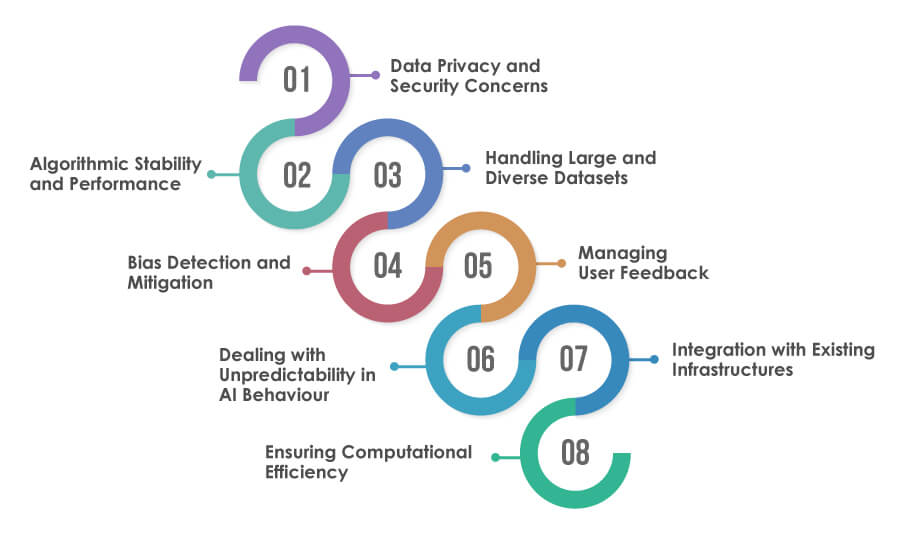

Challenges and Considerations in AI Beta Testing

Beta testing AI tools presents unique challenges and considerations that require careful planning. Addressing the following challenges is essential for conducting effective and reliable beta testing, paving the way for robust and trustworthy AI tools:

Data Privacy and Security Concerns

Protecting data privacy and security is critical, especially as AI systems often require large, sensitive datasets. Ensuring compliance with data protection regulations and implementing robust security measures to prevent breaches is essential.

Algorithmic Stability and Performance

Ensuring that AI algorithms remain stable and perform consistently under different conditions is a technical challenge. This involves dealing with issues like model drift, where the performance of the AI model degrades over time due to changes in the underlying data patterns.
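One way to watch for the drift described above is to compare the distribution of an input feature during the beta against a baseline sample. The sketch below uses the Population Stability Index (PSI) for that comparison. The function name, the bucket count, and the sample data are illustrative assumptions, not part of any specific tool.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; larger values suggest more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    base = bucket_shares(baseline)
    cur = bucket_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))

# Hypothetical data: the beta-period sample is shifted relative to the baseline.
baseline_sample = list(range(100))
beta_sample = [v + 50 for v in baseline_sample]
drift_score = population_stability_index(baseline_sample, beta_sample)
```

A score of zero means the two samples fall into the buckets in identical proportions. The threshold at which a team investigates or retrains is a project decision and should be agreed before the beta starts.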

Handling Large and Diverse Datasets

AI systems require extensive and varied datasets for effective training and testing. Managing these large datasets, ensuring their quality and representativeness, and processing them pose significant technical hurdles.

Bias Detection and Mitigation

AI systems can learn and perpetuate biases in their training data. Identifying and mitigating these biases to ensure the fairness and impartiality of AI tools is a significant technical and ethical challenge.
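As a rough illustration of bias detection, the sketch below computes the gap in positive-prediction rates between user groups, a measure often called the demographic parity difference. It is only one of several fairness measures, and the group labels and records here are hypothetical.

```python
from collections import defaultdict

def positive_rate_by_group(records):
    """records: iterable of (group, predicted_positive) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, predicted_positive in records:
        totals[group] += 1
        positives[group] += int(predicted_positive)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Difference between the highest and lowest positive-prediction rate."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical beta-test predictions tagged with a tester group.
beta_records = [
    ("a", True), ("a", True), ("a", False), ("a", False),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
gap = demographic_parity_gap(beta_records)
```

A gap close to zero means the groups receive positive predictions at similar rates. A large gap is a prompt for investigation rather than proof of unfairness, since base rates may legitimately differ between groups.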

Managing User Feedback

Collecting and managing user feedback during beta testing is crucial for identifying issues and improving AI systems. However, the volume and variety of that feedback can make it difficult to organise and act on.
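A simple first pass at taming feedback volume is to sort comments into coarse categories before anyone reads them in detail. The sketch below does this with keyword matching. The categories and trigger words are hypothetical, and a real beta programme would likely need something more nuanced.

```python
from collections import Counter

# Hypothetical categories and trigger words; adjust to the product under test.
KEYWORDS = {
    "accuracy": ("wrong", "incorrect", "inaccurate"),
    "performance": ("slow", "timeout", "lag"),
    "usability": ("confusing", "unclear", "hard to"),
}

def categorise(comment):
    """Return the first category whose trigger words appear in the comment."""
    text = comment.lower()
    for category, words in KEYWORDS.items():
        if any(word in text for word in words):
            return category
    return "other"

def summarise(comments):
    """Count how many comments fall into each category."""
    return Counter(categorise(comment) for comment in comments)

feedback = [
    "The answer was wrong",
    "Very slow today",
    "Love it",
    "Wrong again and slow",
]
summary = summarise(feedback)
```

Because the first matching category wins, a comment that mentions several problems is counted only once. That keeps the totals simple but can hide secondary issues.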

Dealing with Unpredictability in AI Behaviour

AI systems may exhibit unpredictable behaviour due to their learning algorithms. This unpredictability is a significant challenge in ensuring the system’s reliability and safety, particularly in unfamiliar scenarios.

Integration with Existing Infrastructures

Integrating AI tools into existing technological infrastructures can be complex. This challenge includes ensuring compatibility with current systems, managing resource allocation efficiently, and ensuring the AI solution scales appropriately with the infrastructure.

Ensuring Computational Efficiency

AI models, especially those based on deep learning, can be computationally intensive. Optimising these models to run efficiently, particularly in real-time applications or on limited-resource devices, is a critical technical challenge.

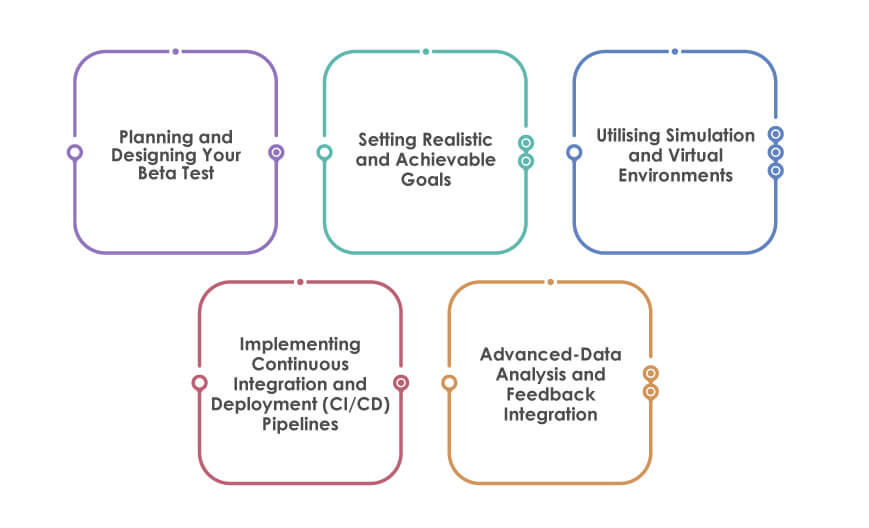

Effective Beta Testing Strategies for AI Tools

By adopting strategic approaches, organisations can maximise the benefits of beta testing, leading to AI tools that are not only innovative but also reliable and user-friendly. Let’s explore the key strategies that can make beta testing of AI tools more effective.

Planning and Designing Your Beta Test

Planning a beta test involves defining clear objectives, understanding the specific aspects of the AI solution that need evaluation, and determining the scope and duration of the testing period. A well-planned beta test will cover various scenarios and use cases, ensuring a comprehensive evaluation of the AI system. Planning for data collection and analysis is also essential, as this information will be used to improve the AI solution.

Setting Realistic and Achievable Goals

The goals of a beta test should align with the overall objectives of the AI project and should be specific, measurable, and time-bound. Clear goals help maintain focus throughout the testing process and provide a framework for evaluating the success of the beta test.

Utilising Simulation and Virtual Environments

In beta testing AI tools, simulation and virtual environments are pivotal, especially for areas like autonomous driving or robotics where real-world testing is risky. These simulated settings allow AI systems to be tested under diverse and controlled conditions, offering insights into their performance in various scenarios. Scenario modelling further enhances this approach by creating complex situations that are hard to replicate in real life, enabling AI to adapt to a broader range of challenges. This method ensures that AI tools are thoroughly tested and optimised for real-world complexities before deployment.

Implementing Continuous Integration and Deployment (CI/CD) Pipelines

Implementing CI/CD pipelines in AI beta testing ensures a more efficient and error-resistant development process. Automated testing within these pipelines helps consistently evaluate new code updates and maintain the integrity of the AI solution. Rollback mechanisms are crucial here, allowing for quick reversals if an update introduces critical issues, thereby protecting the stability and continuity of the beta-testing phase.
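The automated checks and rollback step described above can be sketched as a small quality gate that a pipeline runs after evaluating a new build. The metric names, thresholds, and the `release_decision` function are assumptions made for illustration. They are not taken from any particular CI/CD product.

```python
from typing import NamedTuple

class EvalResult(NamedTuple):
    accuracy: float        # share of correct predictions on the evaluation set
    p95_latency_ms: float  # 95th percentile response time in milliseconds

def release_decision(candidate, current, max_accuracy_drop=0.01, max_latency_ms=500.0):
    """Return "promote" or "rollback" for a candidate build (hypothetical thresholds)."""
    if candidate.p95_latency_ms > max_latency_ms:
        return "rollback"
    if current.accuracy - candidate.accuracy > max_accuracy_drop:
        return "rollback"
    return "promote"

live_build = EvalResult(accuracy=0.90, p95_latency_ms=320.0)
good_build = EvalResult(accuracy=0.91, p95_latency_ms=300.0)
regressed_build = EvalResult(accuracy=0.85, p95_latency_ms=300.0)
```

In a pipeline, a "rollback" result would typically fail the job and trigger whatever mechanism redeploys the previous build. How that mechanism works depends on the deployment platform.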

Advanced Data Analysis and Feedback Integration

Data analytics tools, including AI-powered analytics, help uncover patterns and anomalies in testing data, offering deeper insights. Real-time feedback allows immediate reporting of issues within the application, enabling developers to address them promptly. This approach not only enhances the efficiency of the beta testing process but also significantly contributes to the continuous improvement of the AI solution.
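To make the idea of spotting anomalies in testing data concrete, the sketch below flags days whose error count sits far from the average, using a plain z-score. The threshold and the daily counts are hypothetical, and dedicated analytics tools use more robust methods.

```python
from statistics import mean, stdev

def flag_anomalies(values, threshold=2.0):
    """Return indexes of values more than `threshold` standard deviations from the mean.

    Needs at least two values, because the sample standard deviation is undefined otherwise.
    """
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical error reports per day during a beta; the last day spikes.
daily_errors = [10, 11, 9, 10, 12, 10, 11, 50]
anomalous_days = flag_anomalies(daily_errors)
```

A single extreme value inflates both the mean and the standard deviation, so this approach can miss smaller spikes that occur alongside a very large one.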

Conclusion

The beta testing process not only uncovers and addresses unforeseen issues but also significantly enhances user experience and trust in AI systems. Moreover, adopting a culture of continuous improvement within AI development, guided by the insights gained from beta testing, is essential. This approach ensures that AI tools are not only robust and reliable at the time of their release but also remain relevant and efficient in an ever-evolving technological landscape. Ultimately, the success of AI tools in the real world depends on thorough and insightful beta testing, highlighting its importance in AI development.

Why Choose TestingXperts for Beta Testing Your AI Tools?

• We leverage custom-designed frameworks that align precisely with your AI’s unique requirements, ensuring comprehensive coverage and insightful results.

• Our team brings specific expertise in AI, understanding the intricacies of machine learning, neural networks, and data analytics.

• We prioritise your data’s privacy and security, adhering to stringent global standards and employing state-of-the-art security measures to safeguard sensitive information.

• Recognising the value of transparency, we establish clear, customised communication channels that keep you informed and involved at every stage of the beta test.

To know more, contact our QA experts now.
