- Artificial Intelligence Act of the European Union (EU AI Act)

- Who Must Comply with the EU AI Act?

- Key Requirements & Prohibited Practices of High-Risk AI

- Business Impact of EU AI Act

- Why Partner with Tx to ensure Compliance with AI Regulations?

- Summary

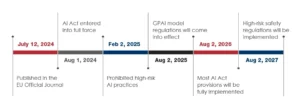

The European Union Artificial Intelligence Act (EU AI Act) entered into force on August 1, 2024, with its obligations applying in phases over the following years. It marked a historic milestone in regulatory standards for AI. The regulation establishes a resilient framework for AI development and release and aims to ensure that AI aligns with human rights and social values. It imposes stricter measures on high-risk AI systems, especially those used in law enforcement and employment, and it prohibits AI systems used for social scoring and police profiling. With it, EU regulators are setting the stage for global AI governance standards.

Artificial Intelligence Act of the European Union (EU AI Act)

The EU AI Act governs the development and use of artificial intelligence technology in the European Union. The Act follows a risk-based approach, applying different rules to AI systems depending on their risk level. It is widely considered the first comprehensive AI regulatory framework, and it prohibits some AI use cases entirely. It also introduces strict governance, risk management, and transparency practices to make the AI space more secure and approachable, and it creates rules for general-purpose AI models (such as IBM Granite and Meta Llama 3). Much like the EU’s GDPR in 2018, the EU AI Act is expected to shape how far AI can positively impact users’ lives.
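The risk-based approach can be pictured as a lookup from use case to risk tier to obligations. The following is a simplified sketch for illustration only, not a legal classification tool: the tiers and example use cases follow the four risk categories described in this article, while `RiskTier`, `EXAMPLE_USE_CASES`, `OBLIGATIONS`, and `obligations_for` are hypothetical names introduced here.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels for the example use cases named in this article.
# A real classification requires legal review of the specific system.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "medical_device": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Obligations summarized from the article's description of each tier.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned outright",
    RiskTier.HIGH: "strict security, transparency, and accuracy requirements",
    RiskTier.LIMITED: "clear user disclosures",
    RiskTier.MINIMAL: "no significant obligations",
}


def obligations_for(use_case: str) -> str:
    """Return a one-line summary of the tier and obligations for a known use case."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        # Unknown systems are not guessed at; they need a manual assessment.
        raise KeyError(f"unknown use case: {use_case!r}")
    return f"{tier.value} risk: {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for name in EXAMPLE_USE_CASES:
        print(f"{name}: {obligations_for(name)}")
```

Keeping the tier-to-obligation table separate from the use-case table means a reclassified system only needs one entry changed.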

Who Must Comply with the EU AI Act?

The European Union Artificial Intelligence Act applies to everyone involved in the AI value chain (providers, authorized representatives, manufacturers, deployers, distributors, and importers). Here is a closer look at who falls under the EU AI Act:

AI System Providers

Any company or developer that develops, markets, or sells AI systems in the European Union region, even if they are based outside the EU.

AI System Users

Businesses or individuals integrating AI technologies into their operations, especially in highly regulated sectors like law enforcement, healthcare, and finance.

Distributors and Importers

Entities that place AI systems on the EU market or make them available there, and that must ensure EU regulations are met.

Non-EU Businesses

Businesses outside the EU must comply if their AI systems, such as AI-driven services or digital platforms, affect people within the EU region.

How Does the EU AI Act Work?

The Act classifies AI solutions into four risk categories:

- Unacceptable risk covers AI applications that are banned outright, such as social scoring and real-time biometric surveillance.

- High risk covers AI solutions used in critical areas such as employment, credit scoring, and medical devices. These solutions must meet strict security, transparency, and accuracy requirements to operate in the EU.

- Limited risk covers AI systems that require clear user disclosures, such as deepfake generators and chatbots.

- Minimal risk covers everyday AI applications, such as spam filters, that carry no significant obligations.

Key Requirements & Prohibited Practices of High-Risk AI

The Act regulates AI systems based on risk level, which reflects the severity and likelihood of the expected harm. It includes:

- Prohibiting certain AI practices that pose unacceptable risks

- Defining standards for developing and releasing high-risk AI solutions

- Defining and implementing rules for general-purpose AI models

If an AI solution does not fall under any of these categories, it is treated as minimal risk and is not subject to specific requirements under the Act. However, it must still meet any transparency requirements that apply and comply with existing laws.

The EU AI Act lists certain AI practices that pose an unacceptable level of risk. For instance, creating or using AI solutions that manipulate people’s reasoning and steer them into harmful choices they would not otherwise make is a prohibited AI practice. Some of the banned AI practices include:

- Social scoring systems that classify or evaluate individuals based on their social activities or behavior and lead to discriminatory treatment that is unjustified by that behavior.

- Emotion recognition systems in educational institutions and workplaces, except for medical or safety purposes.

- AI solutions that exploit individual vulnerabilities such as old age or disabilities.

- Biometric systems that categorize individuals based on sensitive characteristics.

- Real-time biometric recognition systems used by law enforcement in public areas without permission from a judicial or other administrative authority.

- Scraping facial images from the internet or CCTV footage for facial recognition.

Non-Compliance Fine

Non-compliance with the European Union Artificial Intelligence Act can result in hefty fines for organizations. The details are given below:

- Engaging in prohibited AI practices can result in a fine of up to €35,000,000 or 7% of worldwide annual turnover, whichever is greater.

- Non-compliance with the high-risk AI system requirements can result in a fine of up to €15,000,000 or 3% of worldwide annual turnover, whichever is greater.

- Supplying incorrect, misleading, or incomplete information about AI solutions to the authorities can result in a fine of up to €7,500,000 or 1% of worldwide annual turnover, whichever is greater.

Business Impact of EU AI Act

The EU AI Act requires businesses to implement robust governance practices around their AI systems, which may increase development costs and compliance burdens. On the other hand, it will also promote trust and transparency in AI applications. Businesses stand to benefit from greater market access and improved consumer confidence within the EU market.

Non-compliance can result in substantial fines, making it crucial for companies to understand and adhere to the Act’s provisions. Let’s take a close look at the key impacts of the EU AI Act on businesses:

- Because AI systems are categorized by their potential risk level, businesses must apply different compliance measures to each level. For example, stricter rules are mandatory for “high-risk” AI systems in areas like healthcare or employment.

- Businesses must put procedures in place to explain how their AI systems function and to give users information about the decision-making process.

- Specific AI applications considered “unacceptable risk” are banned outright, including those that manipulate human behavior or exploit vulnerable groups.

- Companies must invest in developing and maintaining robust AI governance frameworks, conducting risk assessments, and documenting compliance with the Act’s requirements.

- Adhering to the AI Act can enhance a company’s reputation as a responsible AI developer.

- While some argue that the Act might slow innovation, others believe it will create a responsible AI development environment by setting clear ethical guidelines for AI applications.

Why Partner with Tx to ensure Compliance with AI Regulations?

Tx offers comprehensive AI consultancy and QA services to help you seamlessly implement AI solutions and governance practices in line with industry regulations. Our expertise includes:

- Conducting AI model assessments to ensure models meet compliance and accuracy requirements and are free from bias. End-to-end testing helps identify and mitigate underlying biases so that your product operates ethically and effectively.

- Implementing robust data governance practices to ensure the security and quality of the data used for training AI systems. This helps you maintain accuracy and trust in AI systems.

- Maintaining the effectiveness of AI systems through continuous monitoring and keeping them aligned with evolving regulatory requirements to prevent potential issues.

- Performing audits and testing to check that AI systems follow industry-specific regulations, reducing the risk of regulatory penalties.

Summary

The EU AI Act establishes a structured regulatory framework for AI governance that promotes ethical development and compliance. It classifies AI systems into four risk levels, with stricter rules for high-risk applications in areas like healthcare and law enforcement. Non-compliance can lead to significant penalties, so businesses need robust governance practices. To operate in the EU market while maintaining responsible AI development, they must assess their AI systems, ensure transparency, and adhere to evolving standards. To learn how Tx can help, contact our AI experts now.
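The penalty ceilings quoted in this article all follow the same "whichever is greater" rule, which reduces to taking a maximum of a fixed amount and a share of turnover. The following is a minimal sketch of that arithmetic, not legal advice: `PENALTY_CEILINGS` and `max_fine` are hypothetical names, and the figures are the ones given in the Non-Compliance Fine section.

```python
from decimal import Decimal

# (fixed ceiling in euros, share of annual turnover) per violation type,
# using the figures quoted in this article. Keys are hypothetical labels.
PENALTY_CEILINGS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "high_risk_requirements": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(violation: str, annual_turnover_eur: Decimal) -> Decimal:
    """Return the upper bound of the fine: the greater of the two ceilings."""
    fixed_ceiling, turnover_share = PENALTY_CEILINGS[violation]
    return max(fixed_ceiling, annual_turnover_eur * turnover_share)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("1000000000")):
        ceiling = max_fine("prohibited_practice", turnover)
        print(f"turnover EUR {turnover:,}: ceiling EUR {ceiling:,.0f}")
```

For a company with €1 billion in annual turnover, 7% is €70 million, which exceeds the fixed €35 million, so the turnover-based ceiling applies. At €100 million in turnover, 7% is only €7 million, so the fixed €35 million ceiling applies instead.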
