ETL stands for Extract, Transform, Load, which refers to the process of extracting data from various sources, transforming it into a consistent and usable format, and loading it into a target database or data warehouse. It plays an important role in data integration and management, enabling organizations to consolidate and analyze large volumes of data from disparate systems.

The extraction phase retrieves data from sources such as databases, files, APIs, or scraped web pages. In the transformation phase, the extracted data is cleaned, validated, and standardized so that it is consistent and accurate. Finally, in the loading phase, the transformed data is written to a target system for analysis and reporting. Well-run ETL processes help organizations make informed business decisions, improve data quality, and derive meaningful insights from their data assets.

Importance of Data Quality Testing in ETL

Data quality testing in ETL processes matters for several reasons.

a. Data quality directly affects the accuracy and reliability of the business insights derived from the data. Thorough data quality testing lets organizations confirm that the data extracted and transformed during ETL is correct, complete, and consistent, so that the decisions and reports built on it can be trusted.

b. Data quality testing catches data errors, anomalies, and inconsistencies early in the ETL process, before they propagate through the system and affect downstream processes and analytics. Detecting and resolving these issues promptly improves the overall integrity and reliability of the data.

c. Data quality testing in ETL helps maintain compliance with regulatory requirements and industry standards. In industries such as finance, healthcare, and retail, where sensitive and confidential data is processed, data accuracy and compliance are crucial. Data quality testing helps demonstrate adherence to data governance policies, privacy regulations, and industry-specific data standards.

d. Data quality testing also supports data integration. By validating data formats, resolving inconsistencies, and confirming that data is compatible across systems and databases, organizations can integrate data successfully and avoid data silos.

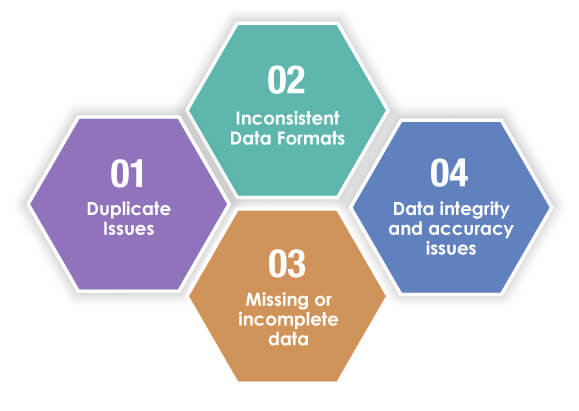

Common Data Quality Issues in ETL

During the ETL process, several common data quality issues can arise as data is extracted from various sources, transformed, and loaded into a target system. These issues can have significant consequences for the accuracy, completeness, and reliability of the data being processed.

Duplicate records, inconsistent data formats, missing or incomplete data, and data integrity and accuracy issues are among the most frequent challenges in ETL. Addressing them is essential to preserve data integrity, improve decision-making, and derive meaningful insights from the ETL process. In this article, we explore each of these issues in detail, their impact on business operations, and methods to mitigate them effectively.

Duplicate Records

Data quality testing in ETL plays a crucial role in identifying and addressing duplicate records, which can significantly reduce the accuracy and reliability of data. Several techniques can be used to check for duplicates. One common approach is to compare key fields or attributes within the dataset: specific fields such as customer IDs, product codes, or other unique identifiers are checked for values that appear more than once.

Another method is to use data profiling, which analyzes the statistical properties of the data to detect patterns indicative of duplicates. In addition, matching algorithms can flag potential duplicates that are not exact copies, based on similarity scores and fuzzy matching. By applying these methods, organizations can detect and resolve duplicate records, protecting data integrity and improving the overall quality of the data used in the ETL process.
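The two approaches above can be sketched in Python. This is a minimal illustration, assuming records arrive as dictionaries with hypothetical `customer_id` and `name` fields: exact duplicates are found by counting key values, and likely duplicates are found by scoring name similarity with the standard library's `difflib.SequenceMatcher`, used here as a simple stand-in for a dedicated fuzzy-matching library. The 0.85 threshold is an illustrative assumption, not a recommended value.

```python
from collections import Counter
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical customer records; the field names are assumptions for this sketch.
records = [
    {"customer_id": "C001", "name": "Jane Smith"},
    {"customer_id": "C002", "name": "John Doe"},
    {"customer_id": "C001", "name": "Jane Smith"},
    {"customer_id": "C003", "name": "Jon Doe"},
]


def exact_duplicate_keys(rows, key):
    """Return key values that occur more than once (exact comparison)."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def fuzzy_duplicate_pairs(rows, field, threshold=0.85):
    """Return (i, j, score) for row pairs whose normalized values are similar."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(rows), 2):
        left = a[field].strip().lower()
        right = b[field].strip().lower()
        score = SequenceMatcher(None, left, right).ratio()
        if score >= threshold:
            pairs.append((i, j, round(score, 2)))
    return pairs


print(exact_duplicate_keys(records, "customer_id"))  # ['C001']
print(fuzzy_duplicate_pairs(records, "name"))  # [(0, 2, 1.0), (1, 3, 0.93)]
```

Comparing every pair of records is quadratic, so on large datasets fuzzy matching is usually limited to candidate pairs that share a blocking key, such as a postcode or the first letters of a surname.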

Inconsistent Data Formats

Data quality testing in ETL is essential for identifying and correcting inconsistent data formats, which can undermine the integrity and reliability of the data being processed. Several techniques can be used to check for format inconsistencies. One approach is to validate data against predefined format rules or data schema specifications.

This involves verifying that the data conforms to the expected format, such as date formats, currency formats, or alphanumeric patterns. Additionally, data profiling techniques can be used to analyze the structure and content of the data, highlighting any variations or anomalies in data formats.
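As a rough illustration of rule-based format validation, the Python sketch below checks each field against a regular expression or a date format. The field names and rules (`order_date`, `amount`, `product_code`) are hypothetical; in practice they would come from the target schema.

```python
import re
from datetime import datetime


def parses_as_date(value, fmt="%Y-%m-%d"):
    """True if value can be parsed with the given strptime format."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False


# Hypothetical format rules per field; real rules would come from the target schema.
FORMAT_RULES = {
    "order_date": parses_as_date,                                              # e.g. 2024-03-15
    "amount": lambda v: re.fullmatch(r"-?\d+\.\d{2}", v) is not None,          # e.g. 19.99
    "product_code": lambda v: re.fullmatch(r"[A-Z]{3}-\d{4}", v) is not None,  # e.g. ABC-1234
}


def format_violations(rows, rules):
    """Yield (row_index, field, value) for each value that breaks its format rule.

    Values are assumed to be strings, as read from a CSV extract; a missing
    field is treated as an empty string and therefore fails its rule.
    """
    for i, row in enumerate(rows):
        for field, is_valid in rules.items():
            value = row.get(field, "")
            if not is_valid(value):
                yield (i, field, value)


rows = [
    {"order_date": "2024-03-15", "amount": "19.99", "product_code": "ABC-1234"},
    {"order_date": "15/03/2024", "amount": "19.9", "product_code": "abc-1234"},
]
for violation in format_violations(rows, FORMAT_RULES):
    print(violation)
```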

Data quality testing may also involve standardizing and transforming data into a consistent format, ensuring uniformity across different sources and systems. By addressing inconsistent data formats through testing, organizations can ensure data uniformity, improve data integration efforts, and enhance the overall quality and usability of the data in the ETL process.
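Standardization often means mapping several source representations onto one canonical form. A minimal sketch, assuming dates arrive in a handful of known formats and should be stored as ISO 8601 (`YYYY-MM-DD`); the list of formats is an assumption and would normally be derived from profiling the actual sources.

```python
from datetime import datetime

# Source date formats assumed to appear in the extracts. Only a day-first slash
# format is listed, so an ambiguous value such as 03/04/2024 is read as 3 April.
# %b expects English month abbreviations (the default C locale).
KNOWN_DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%d-%b-%Y", "%Y%m%d"]


def to_iso_date(value):
    """Return value as an ISO 8601 date (YYYY-MM-DD), or None if no known format matches."""
    text = value.strip()
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


for raw in ["2024-03-15", "15/03/2024", "15-Mar-2024", "20240315", "March 15"]:
    print(f"{raw!r} -> {to_iso_date(raw)}")
```

Returning `None` instead of guessing lets the pipeline route unrecognized values to review rather than silently loading a wrong date.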

Missing or Incomplete Data

Data quality testing in ETL is instrumental in detecting and resolving missing or incomplete data, which can significantly undermine the accuracy and reliability of data analysis and reporting. Several techniques help here. One approach is data profiling, which analyzes the data to identify patterns and anomalies.

Through this process, testers can find null values, empty fields, or partially populated records. Data validation checks can also confirm that mandatory fields are populated and that all required data elements are present. Record count validation (comparing the number of records loaded with the number extracted) and data completeness checks help reveal gaps in the dataset as a whole. By addressing missing or incomplete data through testing, organizations improve the completeness and reliability of their data, enabling accurate decision-making and reliable insights from the ETL process.
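A minimal Python sketch of a mandatory-field check combined with record count validation. The field names are hypothetical, and `expected_count` is assumed to come from the source side, for example a `COUNT(*)` run on the extract or a control total delivered with a file.

```python
# Hypothetical mandatory fields for a customer feed.
MANDATORY_FIELDS = ("customer_id", "email", "country")


def is_blank(value):
    """Treat None, empty strings, and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness_report(rows, mandatory, expected_count=None):
    """Count missing mandatory values per field and compare the row count."""
    missing = {field: 0 for field in mandatory}
    for row in rows:
        for field in mandatory:
            if is_blank(row.get(field)):  # an absent key counts as missing too
                missing[field] += 1
    report = {"row_count": len(rows), "missing": missing}
    if expected_count is not None:
        report["count_matches"] = len(rows) == expected_count
    return report


rows = [
    {"customer_id": "C001", "email": "jane@example.com", "country": "US"},
    {"customer_id": "C002", "email": "", "country": "GB"},
    {"customer_id": "C003", "email": None},
]
print(completeness_report(rows, MANDATORY_FIELDS, expected_count=4))
# {'row_count': 3, 'missing': {'customer_id': 0, 'email': 2, 'country': 1}, 'count_matches': False}
```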

Data Integrity and Accuracy Issues

Data quality testing in ETL is crucial for identifying and resolving data integrity and accuracy issues, ensuring the reliability and trustworthiness of the data being processed. Several techniques can be used to check integrity and accuracy. One common approach is to run data validation checks that compare the extracted data with the source data to confirm that they are consistent.

This involves verifying the accuracy of key fields, such as numeric values, codes, or identifiers. Data profiling techniques can also be utilized to analyze the statistical properties of the data and identify any outliers or anomalies that may indicate integrity issues.

Additionally, data reconciliation processes can be implemented to ensure that the transformed and loaded data accurately reflects the source data. By conducting comprehensive data quality testing, organizations can identify and rectify data integrity and accuracy issues, enhancing the overall quality and reliability of the data used in the ETL process.
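Record-level reconciliation can be sketched as a keyed comparison between source and target rows. This version assumes that the key (a hypothetical `id`) is unique on both sides and that fields should match exactly. Where a transformation deliberately changes values, the comparison would be made against the expected transformed values instead, and floating-point fields would usually be compared with a tolerance (for example `math.isclose`).

```python
def reconcile_by_key(source, target, key, fields):
    """Compare source and target rows that share a key value.

    Returns keys missing from the target, keys that appear only in the
    target, and (key, field, source_value, target_value) mismatches.
    Assumes the key is unique on both sides.
    """
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    missing_in_target = sorted(src.keys() - tgt.keys())
    unexpected_in_target = sorted(tgt.keys() - src.keys())
    mismatches = []
    for k in sorted(src.keys() & tgt.keys()):
        for field in fields:
            if src[k].get(field) != tgt[k].get(field):
                mismatches.append((k, field, src[k].get(field), tgt[k].get(field)))
    return missing_in_target, unexpected_in_target, mismatches


source = [
    {"id": 1, "amount": 100.0, "status": "PAID"},
    {"id": 2, "amount": 250.0, "status": "OPEN"},
    {"id": 3, "amount": 75.5, "status": "PAID"},
]
target = [
    {"id": 1, "amount": 100.0, "status": "PAID"},
    {"id": 2, "amount": 205.0, "status": "OPEN"},
    {"id": 4, "amount": 10.0, "status": "OPEN"},
]
print(reconcile_by_key(source, target, "id", ["amount", "status"]))
# ([3], [4], [(2, 'amount', 250.0, 205.0)])
```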

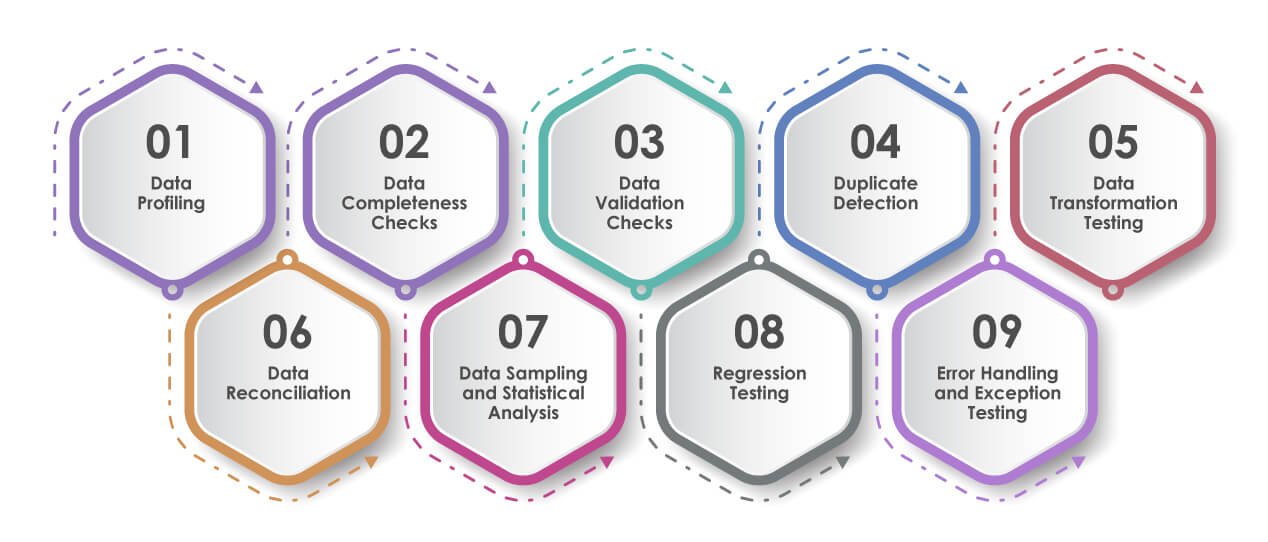

Methods and Techniques for Data Quality Testing in ETL

Data Profiling:

Data profiling involves analyzing the structure, content, and quality of the data to identify anomalies, patterns, and inconsistencies. This technique helps in understanding the data’s characteristics and assessing its quality.
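A very small profiler can already surface many problems. The sketch below, which assumes rows are dictionaries and that each column holds a single comparable type, reports null counts, distinct counts, minimum and maximum values, and the most frequent values per column. The sample data is made up to show two typical findings: inconsistent casing (`US` versus `us`) and an implausible age (`210`).

```python
from collections import Counter


def profile_column(values):
    """Basic profile of one column: nulls, distinct values, min/max, top values."""
    non_null = [v for v in values if v is not None and v != ""]
    counts = Counter(non_null)
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(counts),
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
        "top": counts.most_common(2),
    }


def profile_table(rows):
    """Profile every column found in a list of dict rows."""
    columns = sorted({col for row in rows for col in row})
    return {col: profile_column([row.get(col) for row in rows]) for col in columns}


rows = [
    {"country": "US", "age": 34},
    {"country": "us", "age": 29},
    {"country": "US", "age": None},
    {"country": "GB", "age": 210},
]
for column, stats in profile_table(rows).items():
    print(column, stats)
```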

Data Completeness Checks:

This technique ensures that all required data elements are present and populated correctly. It involves verifying if mandatory fields have values and checking for missing or null values.

Data Validation Checks:

Data validation involves verifying the accuracy and integrity of data by comparing it against predefined business rules, data formats, or reference data. It ensures that the data conforms to the expected standards and rules.
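Validation against business rules and reference data might look like the following Python sketch. The rules (known currency codes, a positive quantity, shipping on or after ordering) and the field names are assumptions chosen for illustration; `VALID_CURRENCIES` stands in for a reference table. The date comparison relies on ISO 8601 strings, which sort in chronological order.

```python
# Hypothetical reference data, e.g. loaded from a currency reference table.
VALID_CURRENCIES = {"USD", "EUR", "GBP"}


def validate_order(order):
    """Return a list of rule violations for one order (an empty list means valid)."""
    errors = []
    if order.get("currency") not in VALID_CURRENCIES:
        errors.append(f"unknown currency {order.get('currency')!r}")
    if (order.get("quantity") or 0) <= 0:
        errors.append("quantity must be positive")
    order_date, ship_date = order.get("order_date"), order.get("ship_date")
    if order_date and ship_date and ship_date < order_date:  # ISO dates compare as strings
        errors.append("ship_date is before order_date")
    return errors


orders = [
    {"id": 1, "currency": "USD", "quantity": 2, "order_date": "2024-03-01", "ship_date": "2024-03-03"},
    {"id": 2, "currency": "XYZ", "quantity": 0, "order_date": "2024-03-05", "ship_date": "2024-03-04"},
]
for order in orders:
    print(order["id"], validate_order(order))
# 1 []
# 2 ["unknown currency 'XYZ'", 'quantity must be positive', 'ship_date is before order_date']
```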

Duplicate Detection:

Duplicate records can affect data accuracy and integrity. Record-matching techniques, whether deterministic (exact matches on one or more key fields) or fuzzy (matches based on similarity scores), are used to identify duplicate entries so they can be resolved.

Data Transformation Testing:

Data transformation is a critical step in the ETL process. Testing involves verifying that the data is transformed correctly, adhering to the defined rules, and maintaining its integrity and accuracy.
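One way to test a transformation is to treat it as a function and compare its output with hand-written expected values derived from the mapping specification. The `transform` function and its rules below are hypothetical; the test is written as a plain function so it runs directly and can also be collected by a test runner such as pytest.

```python
from decimal import Decimal


def transform(row):
    """Hypothetical transformation rules under test:
    - amount_cents (int) becomes amount (Decimal with two decimal places)
    - country code is trimmed and upper-cased
    - full_name is built from first_name and last_name
    """
    return {
        "amount": (Decimal(row["amount_cents"]) / 100).quantize(Decimal("0.01")),
        "country": row["country"].strip().upper(),
        "full_name": f"{row['first_name'].strip()} {row['last_name'].strip()}",
    }


def test_transform():
    # Input and expected output pairs written by hand from the (assumed) mapping spec.
    cases = [
        ({"amount_cents": 1999, "country": " us", "first_name": "Jane", "last_name": "Smith "},
         {"amount": Decimal("19.99"), "country": "US", "full_name": "Jane Smith"}),
        ({"amount_cents": 5, "country": "gb", "first_name": "Li", "last_name": "Wei"},
         {"amount": Decimal("0.05"), "country": "GB", "full_name": "Li Wei"}),
    ]
    for source_row, expected in cases:
        actual = transform(source_row)
        assert actual == expected, f"{source_row} -> {actual}, expected {expected}"


test_transform()
print("transformation tests passed")
```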

Data Reconciliation:

Data reconciliation compares the data in the source and target systems to ensure that the data has been accurately transformed and loaded. It helps identify any discrepancies or inconsistencies between the two datasets.
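Alongside record-by-record comparison, reconciliation is often done on aggregates, which scale better to large tables. This sketch assumes both sides can be read as rows of dictionaries and computes a row count, a control total for one numeric column, and an order-independent checksum; the column names are hypothetical.

```python
import hashlib
from decimal import Decimal


def table_fingerprint(rows, columns, amount_column):
    """Summarize a table for source-versus-target reconciliation.

    Returns the row count, a control total of one numeric column, and an
    order-independent checksum. Each row is hashed on its own and the hashes
    are added modulo 2**64, so row order does not matter and, unlike XOR,
    a duplicated row does not cancel itself out.
    """
    checksum = 0
    total = Decimal("0")
    for row in rows:
        # Both sides must render values identically (same types, same formatting).
        canonical = "|".join(str(row[c]) for c in columns)
        digest = hashlib.sha256(canonical.encode("utf-8")).digest()
        checksum = (checksum + int.from_bytes(digest[:8], "big")) % 2**64
        total += Decimal(str(row[amount_column]))
    return {"rows": len(rows), "total": total, "checksum": f"{checksum:016x}"}


columns = ["id", "amount"]
source = [{"id": 1, "amount": "100.00"}, {"id": 2, "amount": "250.00"}]
target = [{"id": 2, "amount": "250.00"}, {"id": 1, "amount": "100.00"}]  # same rows, new order
print(table_fingerprint(source, columns, "amount") == table_fingerprint(target, columns, "amount"))  # True
```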

Data Sampling and Statistical Analysis:

Sampling techniques are used to select a representative subset of data for testing. Statistical analysis helps identify patterns, outliers, and anomalies in the data, providing insights into its quality and accuracy.
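A sketch of both ideas using only the Python standard library: a seeded random sample so that a test run can be reproduced, and outlier detection with Tukey's interquartile-range fences. The data is made up, and the 1.5 factor is the conventional default for Tukey's fences rather than a value tuned for any real dataset.

```python
import random
import statistics


def sample_rows(rows, k, seed=42):
    """Simple random sample without replacement; the fixed seed makes reruns repeatable."""
    rng = random.Random(seed)
    return rng.sample(rows, min(k, len(rows)))


def iqr_outliers(values, factor=1.5):
    """Return values outside Tukey's fences: [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical order amounts from a loaded table, with one suspicious value.
amounts = [52, 48, 50, 51, 49, 47, 53, 50, 500, 46]
print(sample_rows(amounts, 3))
print(iqr_outliers(amounts))  # [500]
```

The IQR rule is used instead of a mean-plus-three-standard-deviations rule because a single extreme value inflates the standard deviation: with these ten values, 500 lies less than three sample standard deviations above the mean, so a 3-sigma rule would not flag it.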

Regression Testing:

Regression testing ensures that changes or updates in the ETL processes do not introduce data quality issues. It involves retesting the ETL workflows to ensure that existing data quality standards are maintained.
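One lightweight form of regression testing is to record data quality metrics from an accepted run and compare each new run against them. The metric names and the 1% tolerance in this sketch are assumptions; the baseline is shown as a JSON string, standing in for a file saved from a previous run.

```python
import json


def compare_to_baseline(current, baseline, tolerance=0.01):
    """Flag metrics that changed materially compared with a previous, accepted run.

    Both arguments map metric names to numbers. A metric is flagged if it is
    missing from the current run, or if it differs from the baseline by more
    than tolerance * |baseline| (or by more than tolerance in absolute terms
    when the baseline is below 1).
    """
    flagged = []
    for name, expected in baseline.items():
        actual = current.get(name)
        if actual is None:
            flagged.append(f"{name}: missing in current run")
        elif abs(actual - expected) > tolerance * max(abs(expected), 1):
            flagged.append(f"{name}: {expected} -> {actual}")
    return flagged


baseline = json.loads('{"row_count": 10000, "null_email": 12, "duplicate_ids": 0}')
current = {"row_count": 10004, "null_email": 57, "duplicate_ids": 0}
print(compare_to_baseline(current, baseline))  # ['null_email: 12 -> 57']
```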

Error Handling and Exception Testing:

This technique tests how errors and exceptions are handled during the ETL process. It verifies that errors are captured and logged and that appropriate actions, such as rejecting or quarantining bad records, are taken to handle them.
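Error handling can be tested like any other behavior. In this sketch, a hypothetical `load_with_rejects` function logs and quarantines rows that fail to parse instead of aborting the whole load, and a `unittest` test confirms that good rows still load, bad rows are kept in a reject list, and one log entry is written per rejected row (checked with `assertLogs`). The logger name and row layout are assumptions.

```python
import logging
import unittest

log = logging.getLogger("etl.load")  # hypothetical logger name


def parse_row(raw):
    """Hypothetical per-row transformation that raises on bad input."""
    return {"id": int(raw["id"]), "amount": float(raw["amount"])}


def load_with_rejects(raw_rows):
    """Transform rows, logging failures and routing them to a reject list instead of aborting."""
    loaded, rejected = [], []
    for line_no, raw in enumerate(raw_rows, start=1):
        try:
            loaded.append(parse_row(raw))
        except (KeyError, ValueError) as exc:
            log.warning("row %d rejected: %r", line_no, exc)
            rejected.append({"line": line_no, "row": raw, "error": repr(exc)})
    return loaded, rejected


class ErrorHandlingTest(unittest.TestCase):
    def test_bad_rows_are_rejected_and_logged(self):
        rows = [{"id": "1", "amount": "9.50"}, {"id": "x", "amount": "1.00"}, {"id": "3"}]
        with self.assertLogs("etl.load", level="WARNING") as captured:
            loaded, rejected = load_with_rejects(rows)
        self.assertEqual([r["id"] for r in loaded], [1])         # good row still loads
        self.assertEqual([r["line"] for r in rejected], [2, 3])  # bad rows are kept, not dropped
        self.assertEqual(len(captured.output), 2)                # one log entry per rejected row


if __name__ == "__main__":
    unittest.main(argv=["error-handling-sketch"], exit=False)
```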

By employing these methods and techniques, organizations can perform comprehensive data quality testing in ETL, ensuring the accuracy, completeness, consistency, and integrity of the data being processed. This ultimately leads to reliable insights, informed decision-making, and improved business outcomes.

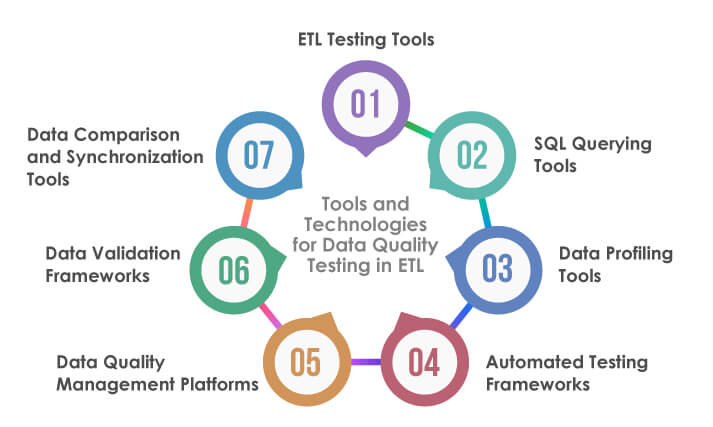

Tools and Technologies for Data Quality Testing in ETL

ETL Testing Tools:

Several tools on the market offer features designed specifically for data quality testing, which makes them a natural fit for testing ETL pipelines. Examples include Informatica Data Quality, Talend Data Quality, and IBM InfoSphere Information Analyzer.

SQL Querying Tools:

SQL querying tools such as SQL Server Management Studio, Oracle SQL Developer, or MySQL Workbench are commonly used for data validation and verification. They enable writing complex SQL queries to validate data accuracy, perform data integrity checks, and compare data across different sources.
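Typical validation queries of this kind are shown below, run from Python against an in-memory SQLite database that stands in for the target system. The tables and data are hypothetical. The queries use plain SQL (`GROUP BY ... HAVING`, `IS NULL`, `LEFT JOIN`) that should carry over to SQL Server, Oracle, or MySQL with little or no change, but dialect differences are worth checking.

```python
import sqlite3

# In-memory SQLite database standing in for the target system.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id TEXT, email TEXT);
    CREATE TABLE orders (order_id INTEGER, customer_id TEXT, amount REAL);
    INSERT INTO customers VALUES ('C1', 'a@example.com'), ('C2', NULL), ('C2', 'b@example.com');
    INSERT INTO orders VALUES (1, 'C1', 10.0), (2, 'C9', 5.0);
""")

CHECKS = {
    # Key values that appear more than once.
    "duplicate_customer_ids": """
        SELECT customer_id, COUNT(*) FROM customers
        GROUP BY customer_id HAVING COUNT(*) > 1
    """,
    # Mandatory column left empty.
    "null_emails": "SELECT customer_id FROM customers WHERE email IS NULL",
    # Orders whose customer does not exist (referential integrity).
    "orphan_orders": """
        SELECT o.order_id FROM orders o
        LEFT JOIN customers c ON c.customer_id = o.customer_id
        WHERE c.customer_id IS NULL
    """,
}

results = {name: conn.execute(sql).fetchall() for name, sql in CHECKS.items()}
for name, rows in results.items():
    print(f"{name}: {'PASS' if not rows else rows}")
```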

Data Profiling Tools:

Data profiling tools help in analyzing the structure, content, and quality of the data. They provide insights into data statistics, patterns, and anomalies, enabling data quality assessment. Examples include Talend Data Profiling, Trifacta Wrangler, and Dataedo.

Automated Testing Framework:

General-purpose test automation tools such as Selenium, Apache JMeter, or TestComplete can streamline parts of data quality testing in ETL by automating repetitive tasks. None of them is a data quality tool as such (Selenium, for example, automates web browsers), but they can be scripted into a testing workflow; JMeter, for instance, can run SQL queries against a database through its JDBC Request sampler.

Data Quality Management Platforms:

Data quality management platforms like Informatica Data Quality, Talend Data Quality, or IBM InfoSphere QualityStage offer comprehensive features for data quality testing in ETL. They provide capabilities for data profiling, data cleansing, deduplication, and validation.

Data Validation Frameworks:

Building custom data validation frameworks using programming languages like Python, Java, or R can be effective for data quality testing. These frameworks allow for customized data validation rules, complex transformations, and comparison of data across multiple sources.
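A custom framework can start very small. The Python sketch below, in which every class, rule, and field name is hypothetical, registers named row-level rules with a decorator and produces a report listing the failing rows for each rule, so new checks can be added without changing the runner.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when a row passes


@dataclass
class Validator:
    rules: List[Rule] = field(default_factory=list)

    def rule(self, name):
        """Decorator that registers a row-level check under a readable name."""
        def register(fn):
            self.rules.append(Rule(name, fn))
            return fn
        return register

    def run(self, rows) -> Dict[str, List[int]]:
        """Return {rule name: indexes of failing rows} for every registered rule."""
        return {r.name: [i for i, row in enumerate(rows) if not r.check(row)] for r in self.rules}


validator = Validator()


@validator.rule("email contains @")
def email_has_at_sign(row):
    return "@" in (row.get("email") or "")


@validator.rule("age between 0 and 120")
def age_in_range(row):
    age = row.get("age")
    return isinstance(age, int) and 0 <= age <= 120


rows = [
    {"email": "a@example.com", "age": 30},
    {"email": "not-an-email", "age": 130},
    {"email": None, "age": 40},
]
print(validator.run(rows))
# {'email contains @': [1, 2], 'age between 0 and 120': [1]}
```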

Metadata Management Tools:

Metadata management tools help in capturing and managing metadata associated with the data in the ETL process. They enable tracking data lineage, data dependencies, and impact analysis, which can aid in data quality testing.
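Impact analysis over lineage metadata is essentially a graph traversal. In this sketch the lineage is a hypothetical dictionary mapping each dataset to the datasets built directly from it; a breadth-first walk lists everything downstream of a dataset, which is the set of tables that may need retesting when a defect is found at that point.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built directly from it.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["dw.dim_customer"],
    "staging.orders": ["dw.fact_sales"],
    "dw.dim_customer": ["dw.fact_sales", "reports.churn"],
    "dw.fact_sales": ["reports.revenue"],
}


def downstream(dataset, lineage):
    """Breadth-first walk returning every dataset affected by a change or defect."""
    seen, queue = set(), deque([dataset])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


print(downstream("crm.customers", LINEAGE))
# ['dw.dim_customer', 'dw.fact_sales', 'reports.churn', 'reports.revenue', 'staging.customers']
```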

Data Comparison and Synchronization Tools:

Tools like Beyond Compare, Redgate SQL Data Compare, or Devart dbForge Data Compare provide features to compare and synchronize data between source and target systems. These tools help identify inconsistencies and discrepancies during data quality testing.

Data Visualization Tools:

Data visualization tools like Tableau, Power BI, or QlikView help in visually representing data quality issues, patterns, and trends. They enable data analysts and testers to gain insights into data quality through interactive visualizations.

By leveraging these tools and technologies, organizations can enhance their data quality testing efforts in the ETL process, ensuring the accuracy, completeness, and reliability of the data being processed.

Conclusion

In conclusion, data quality testing plays a vital role in the ETL process, ensuring the accuracy, completeness, consistency, and reliability of the data being transformed and loaded. It helps organizations identify and rectify data quality issues and prevents incorrect or incomplete data from propagating through the data pipeline.

By implementing effective data quality testing methods, such as data profiling, validation checks, duplicate detection, and reconciliation, organizations can ensure that their data meets the required standards and adheres to business rules and regulations.

This, in turn, leads to reliable insights, informed decision-making, improved operational efficiency, and enhanced customer satisfaction. As decision-making becomes increasingly data-driven, organizations should prioritize data quality testing in their ETL processes to maintain high-quality data and stay competitive.

How TestingXperts can help in leveraging data quality in ETL

TestingXperts can help organizations leverage data quality in the ETL process by providing their expertise in software testing and quality assurance. They can collaborate with organizations to develop a comprehensive test strategy and plan specifically tailored for data quality testing in ETL. TestingXperts can perform data profiling activities to gain insights into the data, identify data quality issues, and define data quality rules.

They can also design and execute data validation checks to verify data accuracy, integrity, and completeness. With their experienced testing professionals, TestingXperts can execute data quality tests, analyze the results, and provide comprehensive reports highlighting identified data quality issues and recommended resolutions.

They can also help organizations establish data governance practices and implement continuous improvement measures to ensure ongoing data quality management. By leveraging TestingXperts’ expertise, organizations can enhance their data quality in the ETL process, leading to reliable and trustworthy data for better decision-making and business outcomes.
